Introduction

In today’s fast-evolving digital landscape, organizations increasingly rely on scalable, secure, and high-performance APIs to power AI-enabled applications. In a recent project focused on delivering advanced machine learning workflows and automation, especially in the Life Sciences domain, we encountered several architectural challenges while scaling AI services to meet production demands.

The solution needed to support end-to-end use cases such as literature review, data extraction, and document processing, all while automating manual tasks, improving research throughput, and supporting regulatory compliance. These requirements called for powerful infrastructure components like Named Entity Recognition (NER), document summarization, and semantic search driven by modern AI models.

As demand for seamless AI integration grows, so does the need for infrastructure that delivers security, scalability, and consistent performance. This post explores the architecture and engineering principles behind building such a system, highlighting the key technologies, autoscaling strategies, and optimization methods that made it robust and production-ready.

The Challenge: Building a Scalable and Secure AI-Powered API

Delivering AI services at scale introduces a distinct set of architectural challenges, especially when supporting real-time natural language processing and computer vision workloads. The key challenges include:

- Low-Latency AI Model Execution: Keeping model inference fast enough that interactive requests feel responsive.

- Unpredictable Traffic Surges: Absorbing sudden increases in request volume without degrading response times.

- API Security: Protecting the API against attacks and unauthorized access.

- Database Optimization: Keeping the database performant while controlling cost.

To overcome these challenges, we implemented a microservices-based API architecture with intelligent autoscaling and caching mechanisms.

Architecture Overview: Scalable and Resilient by Design

The architecture consists of multiple interconnected components designed for scalability, resilience, and performance. The key components include:

- Microservices: Modular services that can scale independently.

- API Gateway & Authorization Mechanism: Handle authentication and API request routing securely.

- Scalable Database & Search Indexing: Provide efficient data storage and retrieval.

- Caching Layer: Reduces database load and improves API response times.

- Autoscaling Mechanisms: Dynamically scale application resources based on real-time traffic patterns.

The sections below describe how these components work together to form a high-performance API infrastructure.

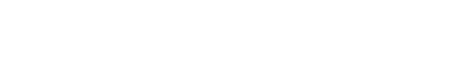

Authentication & Authorization Mechanism

To secure API access, an authorization mechanism was implemented to evaluate incoming requests. The architecture uses a token-based validation process integrated into the API gateway. When a request is received:

- The token is decoded and validated

- Claims are extracted

- Access is granted or denied based on IAM policies

This approach enables flexible authentication, integrating seamlessly with various identity providers.
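The decode, validate, extract, and authorize steps above can be sketched in a few lines. The article does not specify the token format or the policy engine, so this sketch assumes an HMAC-signed, JWT-style token and a hypothetical in-memory `POLICIES` table standing in for IAM policies. The names `issue_token`, `validate_token`, and `is_allowed` are illustrative only.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical role-to-permission table standing in for IAM policies.
POLICIES = {
    "researcher": {"documents:read"},
    "admin": {"documents:read", "documents:write"},
}


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    # Restore the padding that compact tokens strip off.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(claims: dict, secret: bytes) -> str:
    """Demo helper: in practice an identity provider issues the token."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = _b64url_encode(_sign(f"{header}.{payload}", secret))
    return f"{header}.{payload}.{signature}"


def validate_token(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Verify the signature and expiry, then return the claims."""
    try:
        header, payload, signature = token.split(".")
        supplied = _b64url_decode(signature)
        expected = _sign(f"{header}.{payload}", secret)
        if not hmac.compare_digest(expected, supplied):
            raise PermissionError("invalid signature")
        claims = json.loads(_b64url_decode(payload))
    except ValueError as exc:  # wrong segment count, bad base64, bad JSON
        raise PermissionError("malformed token") from exc
    current_time = time.time() if now is None else now
    if claims.get("exp", 0) <= current_time:
        raise PermissionError("token expired")
    return claims


def is_allowed(claims: dict, action: str) -> bool:
    """Grant access only if the caller's role permits the action."""
    return action in POLICIES.get(claims.get("role"), set())
```

A gateway authorizer built along these lines would call `validate_token` first and consult `is_allowed` only when validation succeeds. Production systems typically use asymmetric signatures and a maintained JWT library rather than hand-rolled verification.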


Web Application Firewall (WAF)

The WAF acts as the first layer of defense, protecting our APIs from common web threats such as SQL injection, cross-site scripting (XSS), and distributed denial-of-service (DDoS) attacks. It inspects incoming traffic and applies rules that block malicious requests before they reach the API Gateway, so that far less hostile traffic ever reaches the backend services.

API Gateway

The API Gateway serves as the entry point for all API requests. It enables routing, throttling, and authentication while providing a managed solution to handle millions of requests per second. With the API Gateway, we enforce:

- Rate limiting to prevent abuse.

- Caching to reduce the repeated processing of similar requests.

- Request validation to ensure input integrity before forwarding to backend services.
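To make the rate-limiting idea concrete, here is a minimal token-bucket sketch. The article does not say which algorithm the gateway uses; token bucket is one common choice, and the class name, parameters, and injected clock below are assumptions made for illustration.

```python
class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int, now: float = 0.0):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = now

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.updated)
        # Refill for the elapsed time, never exceeding the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# One bucket per client: a burst of 2 requests, then 1 request per second.
bucket = TokenBucket(rate_per_sec=1.0, capacity=2)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
```

Passing the clock in as an argument keeps the sketch deterministic and easy to test. A real deployment would keep one bucket per API key or client and read the time from a monotonic clock.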

Deep Dive into Key Technologies

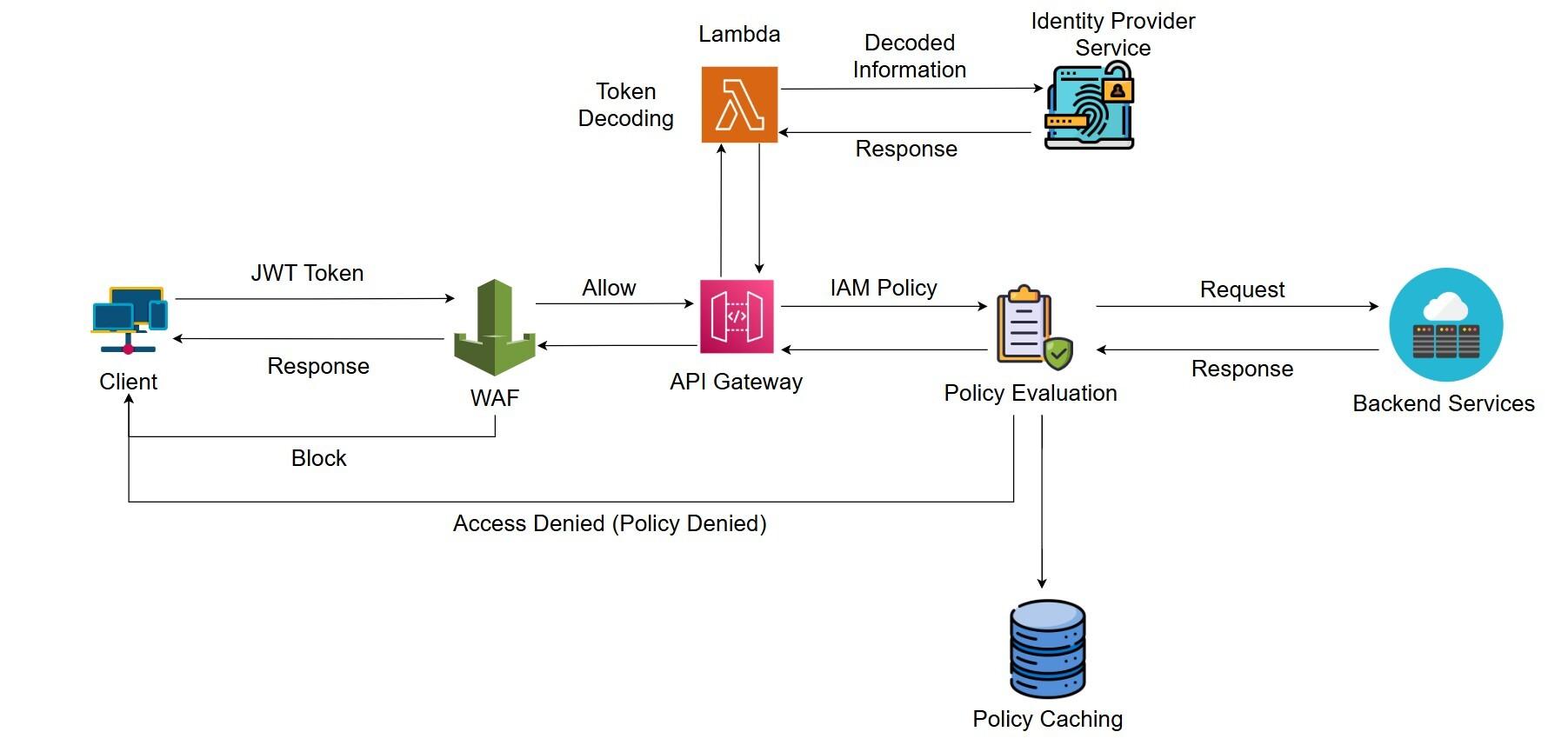

Microservices Architecture: Building Block of Scalability

We follow a standard microservice architecture to ensure scalability, modularity, and ease of maintenance. Each service runs independently and communicates through APIs, allowing for flexibility in development and deployment. Key benefits include:

- Independent scaling using decoupled services.

- Faster deployments with isolated changes.

- Improved fault tolerance by preventing system-wide failures.

- Technology-agnostic approach, allowing teams to choose the best tool for each service.

- Enhanced security, as services have clearly defined responsibilities and limited exposure.


Best Practices for Microservices

To optimize our microservices-based API, we adhere to the following best practices:

- Domain-driven design (DDD): Ensure each microservice has a well-defined business capability and clear ownership.

- Centralized configuration management: Use AWS Secrets Manager to manage properties across services dynamically.

- Inter-service communication: Use REST APIs for synchronous calls and AWS SQS messaging for asynchronous, event-driven processing.

- Observability: Utilize AWS CloudWatch and other AWS services for monitoring and logging.

- Service discovery: Leverage AWS NLB and Kubernetes service discovery for dynamic service registration and load balancing.

Scalable AI Database Architecture

A managed, scalable relational database was chosen to support real-time workloads. Key features include:

- Automatic backups and snapshots for disaster recovery.

- Read replicas to distribute load and improve performance.

- Auto-scaling storage to handle growing data volumes efficiently.
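As a rough illustration of how read replicas spread load, the sketch below routes statements to either the primary or a replica. It assumes routing happens in the application layer and uses hypothetical endpoint names. The article does not describe how its services select a database endpoint.

```python
import itertools


class ReplicaRouter:
    """Send writes to the primary and rotate reads across replicas."""

    def __init__(self, primary: str, replicas: list):
        self.primary = primary
        # Round-robin iterator over replicas, or None when there are none.
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        """Return the endpoint that should execute this statement."""
        is_read = sql.lstrip().lower().startswith("select")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


# Hypothetical endpoint names, for illustration only.
router = ReplicaRouter("db-primary", ["db-replica-1", "db-replica-2"])
targets = [router.route(q) for q in (
    "SELECT * FROM documents",
    "SELECT * FROM entities",
    "INSERT INTO documents VALUES (1)",
)]
```

The prefix check is deliberately naive. Replicas can lag behind the primary, so reads that must observe a just-committed write, as well as locking reads such as `SELECT ... FOR UPDATE`, would still need to go to the primary.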

Efficient Search & Indexing

An advanced search system is integrated into our architecture for real-time search and analytics. It enhances our API efficiency by enabling:

- Fast full-text search across large datasets.

- Distributed indexing to scale horizontally.

- Advanced query capabilities for structured and unstructured data.
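The article does not name the search engine, but full-text engines are generally built on an inverted index, which maps each term to the documents that contain it. The toy below is a sketch of that idea only, with made-up document text. It omits stemming, ranking, and sharding.

```python
import re
from collections import defaultdict


class InvertedIndex:
    """Map each term to the set of document ids that contain it."""

    def __init__(self):
        self._postings = defaultdict(set)

    @staticmethod
    def _tokens(text: str) -> list:
        # Lowercase and split on anything that is not a letter or digit.
        return re.findall(r"[a-z0-9]+", text.lower())

    def add(self, doc_id: int, text: str) -> None:
        for term in self._tokens(text):
            self._postings[term].add(doc_id)

    def search(self, query: str) -> set:
        """Return ids of documents that contain every query term."""
        terms = self._tokens(query)
        if not terms:
            return set()
        postings = [self._postings.get(term, set()) for term in terms]
        return set.intersection(*postings)


index = InvertedIndex()
index.add(1, "Named entity recognition for clinical documents")
index.add(2, "Semantic search over clinical literature")
index.add(3, "Document summarization")
```

A lookup touches only the postings for the query terms rather than scanning every document, which is why this structure stays fast on large datasets. Distributed engines split these postings across shards to scale horizontally.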

Caching for Performance & Reduced DB Connections

A caching layer enhances API performance by storing frequently accessed data in memory. This reduces database queries and improves response times for repeated requests. Key optimizations include:

- Session caching to store user authentication tokens.

- Response caching to avoid redundant API calls.

- Rate-limit counters kept in the cache to curb API abuse efficiently.

- Data caching for frequently run database queries, which reduces connection overhead.

We leverage Redis for our caching layer due to its speed and efficiency.
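The data-caching optimization is commonly implemented as a cache-aside pattern with a time-to-live. The sketch below uses an in-memory dictionary as a stand-in for Redis so that it runs without a server. The class name, TTL value, and loader function are assumptions, not details from the project.

```python
class TTLCache:
    """Cache-aside helper: serve from memory, fall back to a loader on a miss."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        self._store = {}  # key -> (value, expires_at)

    def get_or_load(self, key, loader, now: float):
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: no database call
        value = loader(key)  # cache miss or expired entry: query the source
        self._store[key] = (value, now + self.ttl)
        return value


db_calls = []


def load_from_db(key):
    """Stand-in for a database query; records each call."""
    db_calls.append(key)
    return key.upper()


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_load("doc-1", load_from_db, now=0)
second = cache.get_or_load("doc-1", load_from_db, now=30)  # served from cache
third = cache.get_or_load("doc-1", load_from_db, now=61)   # expired, reloaded
```

With Redis, the same flow would typically be a read followed, on a miss, by a write that sets an expiry. The TTL bounds how stale a cached value can become.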

Autoscaling with Kubernetes and Lambda

Kubernetes scales microservices horizontally by adjusting the number of running instances, while AWS Lambda handles event-driven tasks such as image processing. Together they balance cost-efficiency with high availability.

To handle surges in traffic, we implemented a multi-faceted approach:

- Kubernetes-Based Autoscaling: Microservices are deployed on Kubernetes, enabling us to leverage its powerful autoscaling capabilities. We dynamically adjust the number of pod replicas based on real-time CPU and memory utilization.

- Lambda for Event-Driven Scaling: For specific tasks, such as image processing, we utilize AWS Lambda functions. These serverless functions automatically scale based on the number of incoming events.

- Load Balancing: Load balancers distribute traffic evenly across instances.

- Read Replicas: Read queries are served from replicas, reducing the load on the primary database.

- Rate Limiting and Request Queuing: Excess requests are throttled or queued so that bursts do not overload backend services.
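For the Kubernetes part of this approach, the Horizontal Pod Autoscaler documents its core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below reproduces that formula. The replica bounds and utilization figures are illustrative assumptions, not values from the project.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply desired = ceil(current * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: do not scale
    else:
        desired = math.ceil(current * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target.
scale_up = desired_replicas(4, current_metric=90, target_metric=60)
# 4 pods averaging 30% CPU against a 60% target.
scale_down = desired_replicas(4, current_metric=30, target_metric=60)
```

When several metrics are configured, such as CPU and memory, the autoscaler evaluates each one separately and uses the largest resulting replica count.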

Future Roadmap

Looking forward, the architecture will incorporate AI-based predictive scaling, enabling the system to anticipate traffic surges before they happen. Further expansion of serverless functions is planned to improve cost-efficiency and reduce operational overhead.

Conclusion

By combining industry best practices, including WAFs, API gateways, Kubernetes-based autoscaling, microservices, and caching layers, we built a secure, scalable infrastructure ready for production-grade AI workloads.

This architecture delivers high availability, strong performance, and cost-efficiency, making it well suited to modern cloud-native applications. With continuous monitoring and intelligent scaling, our platform remains resilient and responsive as load varies.

Athiselvam N is a skilled CapeStart Senior Software Engineer who builds scalable, high-performance apps using Spring Boot, Angular, Node.js, MySQL, MongoDB, and Elasticsearch. He specializes in microservices, APIs, LLM, OpenAI, and Agentic AI, with expertise in Python, Redis, API Gateway, AWS, and Kubernetes. Passionate about innovation, he delivers impactful solutions across domains.