Like many challenges, it began with a user who kept getting the wrong videos in her feed. It looked like a minor glitch in our recommendation system, but the deeper we dug, the clearer it became that a hidden bias in our code was making the system unfair. This was not only a matter of poor user experience but of equity and credibility.

Since then, AI ethics has stopped being an abstract whiteboard discussion and become a concrete problem we had to solve right away. Building powerful AI is not difficult; building it to be fair, transparent, and trustworthy is a different matter. This post covers our journey and the framework we built to uphold AI ethics, keeping the technology smart but also kind.

The Dilemma: Why AI Ethics Is Even More Important Now

AI bias is rarely malicious. It usually creeps in through old, skewed data or through our own prejudices, which we quietly encode in our code. For example, a loan-screening AI trained on biased historical data may systematically disadvantage certain groups without anyone noticing.

This poses a threefold difficulty:

- Fairness – How can we ensure that AI is fair to everyone? What does fairness even mean? It might mean that all groups receive the same approval rate, or that equally qualified people have the same chance of a favorable outcome. Choosing the right metric to quantify it is critical.

- Bias Mitigation – How can we identify and eliminate bias? That’s tricky. Overcorrecting may end up harming the very people you are trying to help, or it may make the AI less accurate.

- Explainability – When the AI makes a high-stakes decision (who gets a loan, or a diagnosis), we want to know why it made that choice. An opaque black-box model, however smart, undermines trust and makes a wrong decision hard to correct.
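To make the point about choosing a fairness metric concrete, here is a minimal, library-free sketch. The function names and the toy data are illustrative assumptions, not our production code. It compares two common definitions: equal selection rates across groups (demographic parity) and equal true positive rates across groups (equal opportunity).

```python
from collections import defaultdict

def selection_rates(y_pred, groups):
    """Fraction of positive predictions per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def true_positive_rates(y_true, y_pred, groups):
    """Fraction of truly positive cases that were predicted positive, per group."""
    totals, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            totals[group] += 1
            hits[group] += pred
    return {g: hits[g] / totals[g] for g in totals}

def gap(rates):
    """Largest difference between any two groups' rates."""
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: two groups of four people each.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]

dp_gap = gap(selection_rates(y_pred, groups))              # demographic parity gap
eo_gap = gap(true_positive_rates(y_true, y_pred, groups))  # equal opportunity gap
print(dp_gap, eo_gap)
```

On this toy data both groups are approved at the same rate, so the demographic parity gap is zero, yet qualified members of group "b" are approved only half as often as those in group "a". The same model can look fair under one metric and unfair under another.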

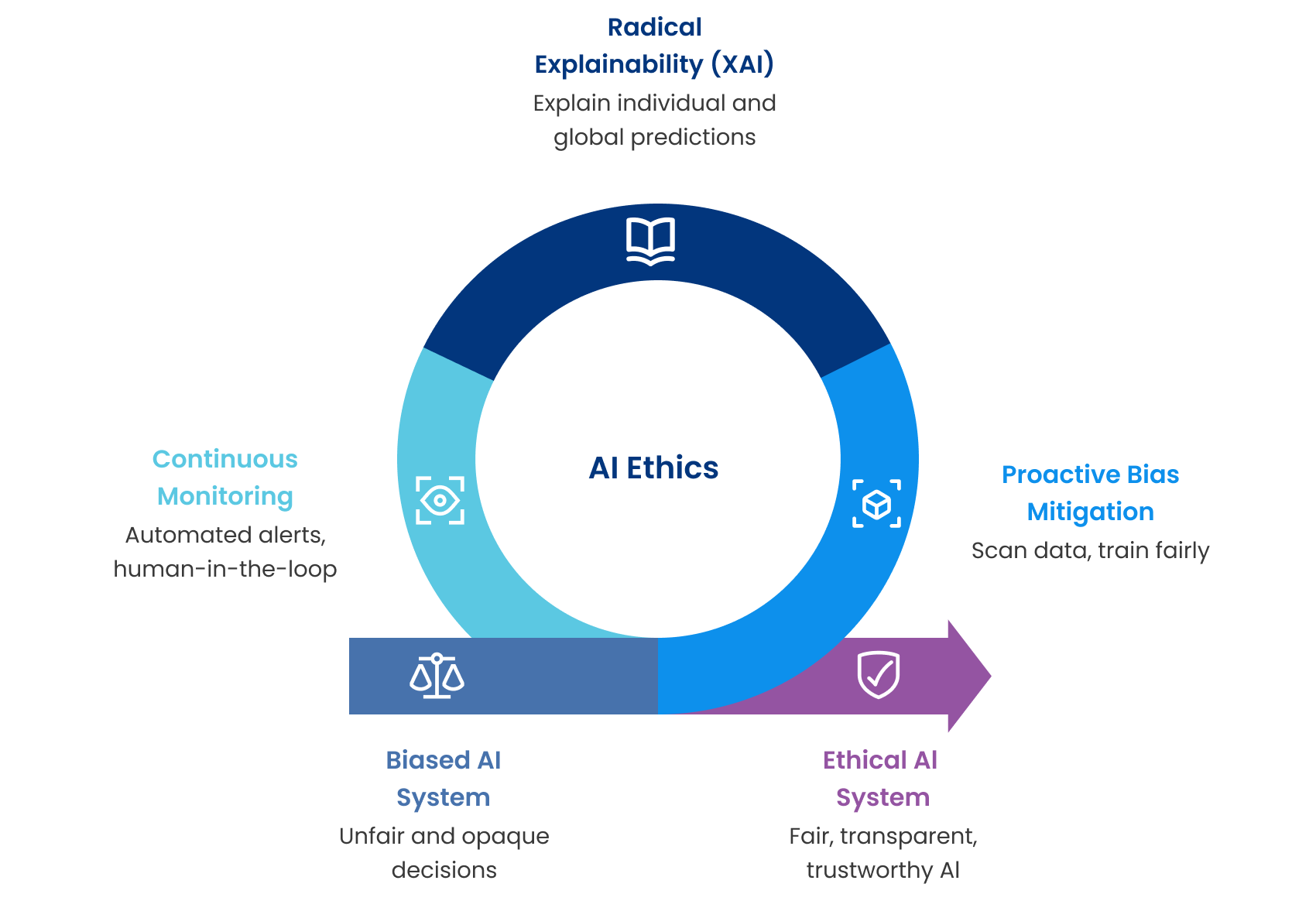

Our Proposal: A Three-Step Guide to Ethical AI

We tackled this with a three-step strategy, building tools and techniques directly into our AI workflow.

Step 1: Proactive Fairness and Bias Mitigation

Fairness is not an afterthought. We think about it long before we start writing code.

Data preparation: it all starts with the data. We analyze it with Fairlearn and visualize how it is distributed across attributes such as location or age. When the distribution is uneven, we apply SMOTE to rebalance it before training begins.
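The rebalancing idea can be sketched without any libraries. Note the swap: SMOTE synthesizes new points by interpolating between neighbors, whereas this sketch uses plain random oversampling (duplicating rows) to keep the example short. The `region` field and the helper name are hypothetical.

```python
import random
from collections import Counter

def oversample_to_balance(rows, key, seed=0):
    """Duplicate randomly chosen rows of smaller groups until every
    group is as large as the largest one (random oversampling, not SMOTE)."""
    rng = random.Random(seed)
    by_group = {}
    for row in rows:
        by_group.setdefault(row[key], []).append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw the missing rows with replacement from the same group.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced

# Hypothetical, deliberately skewed dataset.
rows = [{"region": "north", "label": 1} for _ in range(6)]
rows += [{"region": "south", "label": 0} for _ in range(2)]

before = Counter(row["region"] for row in rows)
after = Counter(row["region"] for row in oversample_to_balance(rows, "region"))
print(before, after)
```

Duplicating rows is the bluntest option and can encourage overfitting to the repeated examples, which is one reason interpolation-based methods such as SMOTE exist.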

Training: we use fairness-aware training techniques. Fairlearn's GridSearch helps us search across a large number of candidate models to find one that is not only accurate but also fair. For example, for a content moderation tool, we made sure it did not over-flag content written by non-native English speakers, a common failure in language models.

Post-processing: we can adjust the output even without retraining. Setting different thresholds for different groups keeps results fair, and Fairlearn's ThresholdOptimizer makes this straightforward.
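Here is a simplified, library-free illustration of group-specific thresholds. It is not Fairlearn's ThresholdOptimizer, which solves a constrained optimization. This sketch only picks, per group, the cutoff that selects a target share of that group. All names and scores are hypothetical.

```python
def threshold_for_rate(scores, target_rate):
    """Cutoff such that roughly target_rate of scores satisfy score >= cutoff."""
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

def fit_group_thresholds(scores, groups, target_rate):
    """One cutoff per group, each selecting about the same share of its group."""
    by_group = {}
    for score, group in zip(scores, groups):
        by_group.setdefault(group, []).append(score)
    return {g: threshold_for_rate(s, target_rate) for g, s in by_group.items()}

def predict(scores, groups, thresholds):
    """Apply each group's own cutoff to its members' scores."""
    return [int(score >= thresholds[group]) for score, group in zip(scores, groups)]

# Hypothetical model scores: group "b" is scored systematically lower.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

single_cutoff = [int(score >= 0.5) for score in scores]
thresholds = fit_group_thresholds(scores, groups, target_rate=0.5)
per_group = predict(scores, groups, thresholds)
print(single_cutoff, thresholds, per_group)
```

With a single cutoff of 0.5, everyone in group "a" is selected but only one person in group "b". With per-group cutoffs, half of each group is selected. Whether equalizing selection rates is the right goal depends on the fairness definition chosen for the use case.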

Step 2: Radical Explainability (XAI)

We believe we cannot trust a decision that we cannot explain, so we use tools that peer into the black box.

Local Explanations – When we need to explain an individual prediction, SHAP is our choice. SHAP provides a force plot showing the contribution of each factor. So when somebody asks, "Why was my loan denied?", we can answer: because your debt-to-income ratio was high and your credit history is short.
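The idea behind a per-prediction attribution can be shown on a toy linear model, where the result is exact: assuming independent features, each feature's Shapley value is its weight times the applicant's deviation from a baseline (here, a hypothetical average applicant). The sketch below does not call the SHAP library, and the weights, feature names, and values are invented for illustration.

```python
# Hypothetical linear credit score: intercept + sum(weight * feature value).
INTERCEPT = 1.0
WEIGHTS = {"debt_to_income": -4.0, "credit_history_years": 0.25, "income_10k": 0.5}

def score(features):
    """Model output for one applicant."""
    return INTERCEPT + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)

def linear_contributions(baseline, applicant):
    """Per-feature contribution relative to the baseline applicant."""
    return {name: WEIGHTS[name] * (applicant[name] - baseline[name]) for name in WEIGHTS}

baseline = {"debt_to_income": 0.25, "credit_history_years": 8, "income_10k": 5}
applicant = {"debt_to_income": 0.5, "credit_history_years": 2, "income_10k": 6}

contributions = linear_contributions(baseline, applicant)

# Features that pushed the score down, most harmful first.
top_reasons = sorted(
    (name for name, value in contributions.items() if value < 0),
    key=lambda name: contributions[name],
)
print(contributions, top_reasons)
```

The contributions add up to the difference between the applicant's score and the baseline score, which is the additivity property that makes this kind of explanation easy to communicate. For non-linear models the values need to be estimated, which is what SHAP's explainers do.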

Global Explanations – To look at the model as a whole, we use the Explainable Boosting Machine (EBM), an interpretable machine learning model provided by InterpretML that preserves accuracy while letting us see how each factor affects predictions. It even showed us that time of day mattered more in our recommendations than we had imagined, which led us to build some new time-based features.

Step 3: Continuous Monitoring and Governance

Step three: keep watch. Ethical AI is not a one-time fix; data evolves, and new biases appear.

Automated monitoring – Fairness and explainability metrics are tracked in our CI/CD pipeline. We configured Alibi Detect to send alerts when parity drops or when predictions for a group begin to drift.
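As a rough picture of what such an alert checks, here is a library-free sketch. It is a plain threshold check rather than Alibi Detect's statistical drift detectors, and the function names, limits, and baseline rates are assumptions made for illustration.

```python
def positive_rates(preds, groups):
    """Share of positive predictions per group in one batch."""
    totals, positives = {}, {}
    for pred, group in zip(preds, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}

def fairness_alerts(baseline_rates, preds, groups, max_gap=0.1, max_shift=0.1):
    """Return alert messages for a batch; an empty list means the batch passes."""
    current = positive_rates(preds, groups)
    alerts = []
    # Check 1: parity between groups within this batch.
    gap = max(current.values()) - min(current.values())
    if gap > max_gap:
        alerts.append(f"parity gap {gap:.2f} exceeds {max_gap:.2f}")
    # Check 2: each group's rate compared with its recorded baseline.
    for group, rate in sorted(current.items()):
        shift = abs(rate - baseline_rates.get(group, rate))
        if shift > max_shift:
            alerts.append(f"group {group!r} positive rate moved by {shift:.2f}")
    return alerts

baseline_rates = {"a": 0.5, "b": 0.5}  # hypothetical rates recorded at release time
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

healthy = fairness_alerts(baseline_rates, [1, 1, 0, 0, 1, 0, 1, 0], groups)
drifted = fairness_alerts(baseline_rates, [1, 1, 1, 0, 1, 0, 0, 0], groups)
print(healthy, drifted)
```

In a pipeline, a non-empty result could fail the build or page the on-call engineer. Fixed limits like these are easy to reason about but noisy on small batches, which is where statistical tests earn their keep.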

Human-in-the-loop – For our most sensitive AI systems, we keep real people in the loop. Any low-confidence or high-impact decision the system flags is reviewed by a diverse team.
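The routing rule itself is simple to express. The sketch below is a minimal illustration; the `Decision` fields and the 0.8 confidence cutoff are assumptions, not values from our system.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    confidence: float   # model's confidence in its own prediction, 0..1
    high_impact: bool   # e.g. a loan denial or an account suspension

def needs_human_review(decision, min_confidence=0.8):
    """Send a decision to reviewers if it is high impact or the model is unsure."""
    return decision.high_impact or decision.confidence < min_confidence

def route(decisions, min_confidence=0.8):
    """Split case ids into a human review queue and an automatic queue."""
    review, automatic = [], []
    for decision in decisions:
        queue = review if needs_human_review(decision, min_confidence) else automatic
        queue.append(decision.case_id)
    return review, automatic

decisions = [
    Decision("c1", confidence=0.95, high_impact=False),
    Decision("c2", confidence=0.60, high_impact=False),
    Decision("c3", confidence=0.99, high_impact=True),
]
review_queue, automatic_queue = route(decisions)
print(review_queue, automatic_queue)
```

Treating high impact as an unconditional trigger means even a very confident model cannot bypass review on the decisions that matter most.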

Lessons Learned and Benefits

Adopting this ethics framework has not merely been a matter of doing the right thing. It has been a genuine game changer for us.

Higher User Trust: By explaining the rationale behind our AI's actions, we have seen a genuine increase in user satisfaction and trust. It shows in our metrics, and customers feel more at ease using our AI.

Better Model Performance: Tackling bias forced us to dig deeper, develop better features, and build stronger models, which improved our accuracy considerably.

Lower Risk: Addressing fairness and bias proactively helps the organization stay ahead of regulatory requirements and reduces the risk of reputational damage.

We have learned that there is no single, universal definition of fairness and that context matters most. We have also learned that involving a diverse group of stakeholders early, including engineering, product, legal, and social science experts, is needed to uncover potential ethical blind spots.

Conclusion: How to Create an AI System That Wins Trust

That one user's feedback has radically changed our AI development strategy. We no longer see ethical considerations as restrictions but as catalysts for creative engineering. Tools and frameworks are only part of the solution; the substantive work is building a culture of responsibility in which every engineer is empowered to ask difficult questions and build AI that is not just powerful but also just. The journey continues, and we are committed to it, one line of code, one model, and one user experience at a time.