The pace of change in the AI world is staggering, so much so that it’s not always easy to keep up. That’s why we’ve put together this brief guide on the differences between the two newest (as of July 2024) additions to OpenAI’s GPT family: GPT4 Turbo and GPT4o (Omni).

Let’s examine what makes each model useful, how they compare to each other, and some of the tasks they’re best suited to perform.

What is GPT4?

Launched: March 2023

First, let’s quickly review the basics of GPT4, since it’s the bedrock on which both of these models are built. Compared with its predecessor, GPT 3.5, GPT4 can process images as well as text, understands context better, provides more accurate responses, and reasons more effectively. It is also relatively expensive to operate from a computational perspective.

What is GPT4 Turbo?

Launched: November 2023

As its name implies, GPT4 Turbo responds faster and uses fewer computing resources than the original GPT4, making it cheaper for developers and other users to run. It has a knowledge cutoff of April 2023 and a 128k-token context window, which OpenAI says is equivalent to about 300 pages of text in a single prompt.
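
To make the 128k figure concrete, here is a minimal sketch that estimates whether a prompt will fit in GPT4 Turbo’s window. It relies on OpenAI’s rough rule of thumb of about four characters per token for English text. The 4,096-token output reserve and the function names are illustrative assumptions. For exact counts you would use the model’s real tokenizer, such as OpenAI’s `tiktoken` library.

```python
import math

# Assumption: OpenAI's rough rule of thumb of ~4 characters per token for English text.
CHARS_PER_TOKEN = 4
# GPT4 Turbo's context window, in tokens.
CONTEXT_WINDOW_TOKENS = 128_000


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; use a real tokenizer for exact counts."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_output: int = 4_096) -> bool:
    """True if the estimated prompt size plus an output budget fits in the window."""
    # The 4,096-token default reserve is an illustrative assumption.
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    # Hypothetical prompts of 400,000 and 500,000 characters.
    print(fits_in_context("a" * 400_000))  # ~100,000 + 4,096 tokens -> True
    print(fits_in_context("a" * 500_000))  # ~125,000 + 4,096 tokens -> False
```

By the same heuristic, 128,000 tokens works out to roughly 512,000 characters, or about 1,700 characters per page across 300 pages.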

What is GPT4o (Omni)?

Launched: May 2024

GPT4o, the newest member of the GPT4 family, greatly expands multimodal capabilities. It accepts any combination of text, audio, images, and video as input and can generate text, audio, and images as output. According to OpenAI, GPT4o is much faster than Turbo and can remember objects and events. It can also respond to audio input in as little as 232 milliseconds (about 320 milliseconds on average), which is similar to human response time in a conversation.

“GPT-4o reasons across voice, text and vision,” OpenAI CTO Mira Murati explained during an online presentation in May 2024, as quoted in TechCrunch. “And this is incredibly important, because we’re looking at the future of interaction between ourselves and machines.”

Performance Comparison: GPT4 Turbo vs. GPT4o

When you get right down to it, GPT4 Turbo vs. GPT4o isn’t a fair fight. OpenAI itself says Omni is 2x faster, 50% cheaper, and has 5x higher rate limits than Turbo. Turbo costs $10 per 1M input tokens and $30 per 1M output tokens, while Omni costs $5 and $15, respectively.
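
To see what those prices mean for an actual workload, here is a minimal cost calculator that uses the per-million-token prices quoted above. These are launch prices as of July 2024, and current rates may differ. The model labels and the example workload are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in US dollars, as quoted in this article (July 2024)."""
    input_per_million: float
    output_per_million: float


PRICES = {
    "GPT4 Turbo": Pricing(input_per_million=10.0, output_per_million=30.0),
    "GPT4o": Pricing(input_per_million=5.0, output_per_million=15.0),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of a workload with the given input and output token counts."""
    p = PRICES[model]
    return (input_tokens * p.input_per_million
            + output_tokens * p.output_per_million) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500k output tokens.
    for name in PRICES:
        print(f"{name}: ${request_cost(name, 2_000_000, 500_000):.2f}")
```

The example workload comes to $35.00 on Turbo and $17.50 on Omni. Because both of Omni’s prices are exactly half of Turbo’s, the 50% saving holds for any mix of input and output tokens.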

Omni helps power a much-improved ChatGPT that can hold natural voice conversations. It can also answer direct questions about what’s happening in photos or videos, and it does all this in around 50 languages.

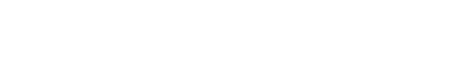

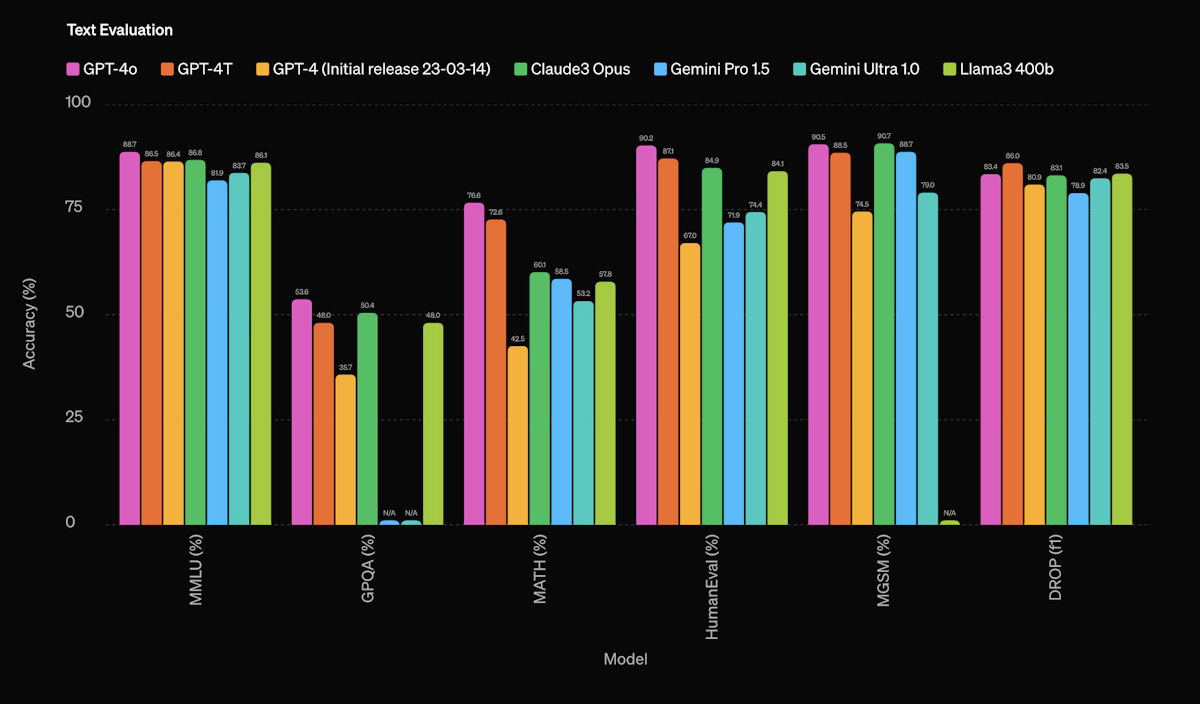

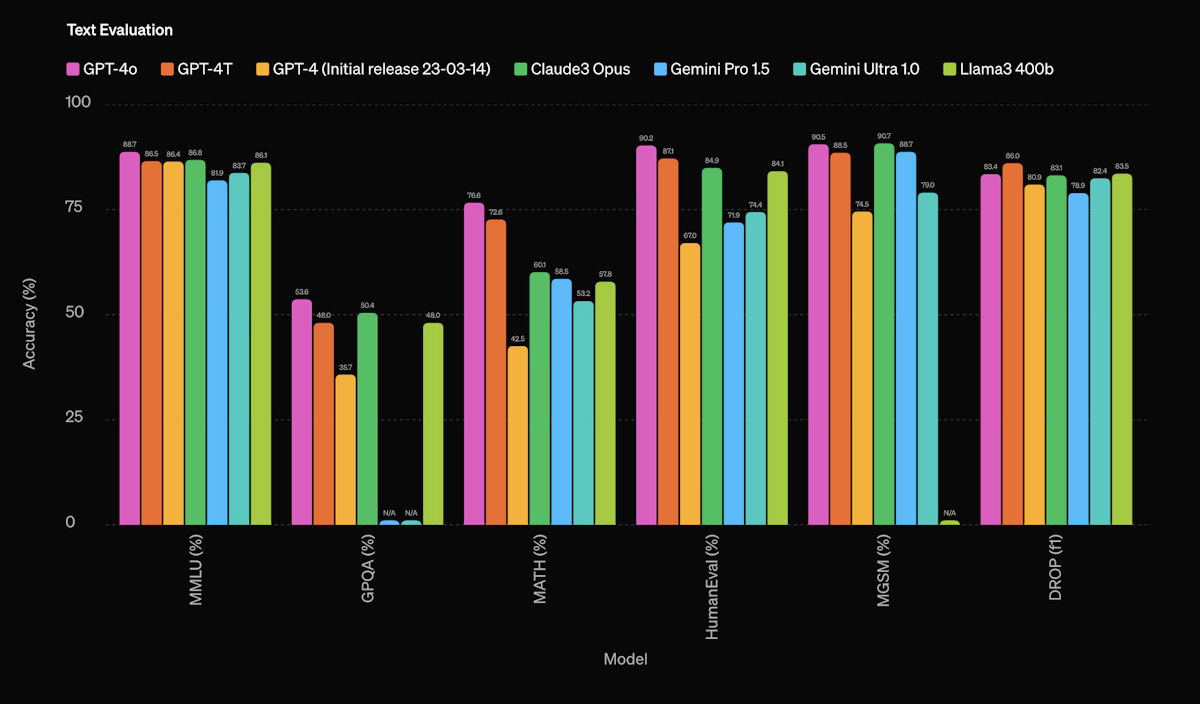

Source: OpenAI
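
For developers, the same photo-question ability is exposed through OpenAI’s Chat Completions API, where a single user message can combine text and image parts. The sketch below only builds and prints a JSON request body for `gpt-4o` in the shape the API documented at the time of writing. The helper name and image URL are hypothetical. Actually sending the request requires an OpenAI API key, for example via the official `openai` Python package’s `client.chat.completions.create`.

```python
import json


def build_image_question(question: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions request body pairing a text question with an image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user message can mix text parts and image parts.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_image_question(
        "What is happening in this photo?",
        "https://example.com/street-scene.jpg",  # hypothetical image URL
    )
    # This only prints the JSON; POSTing it to
    # https://api.openai.com/v1/chat/completions requires an API key.
    print(json.dumps(body, indent=2))
```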

Here’s how the two models stack up head-to-head:

| Metric | GPT4 Turbo | GPT4o |
|---|---|---|
| Latency | Higher | Lower |
| Throughput | 20 tokens per second | 109 tokens per second |
| Multimodality | Text, images | Text, images, audio, video |
| Data extraction/classification | Slower and less precise | Faster and more precise |
| Vision evaluation (MMMU score) | 63.1 | 69.1 |
| Customer support ticket resolution | 83.33% precision | 88% precision |
| Verbal reasoning | 50% accuracy | 69% accuracy |

Sources: klu.ai, Eva Kaushik
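
Throughput differences add up on long responses. As a back-of-the-envelope sketch, the code below converts the table’s tokens-per-second figures into approximate generation times. It assumes constant throughput and ignores the delay before the first token arrives, so treat the results as rough estimates.

```python
# Throughput figures from the comparison table (tokens per second).
THROUGHPUT_TPS = {"GPT4 Turbo": 20, "GPT4o": 109}


def generation_seconds(model: str, output_tokens: int) -> float:
    """Approximate time to stream output_tokens, ignoring time-to-first-token."""
    return output_tokens / THROUGHPUT_TPS[model]


if __name__ == "__main__":
    for name in THROUGHPUT_TPS:
        print(f"{name}: {generation_seconds(name, 1_000):.1f} s for 1,000 tokens")
```

With these figures, a 1,000-token response takes about 50 seconds on Turbo and about 9 seconds on Omni, roughly 5.5 times faster.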

While Omni clearly outperforms Turbo in most tasks, both models still need improvement in complex data extraction tasks, word manipulation, spatial reasoning, and pattern recognition.

Interestingly, one analysis found that Omni generally outperformed Turbo at long-context utilization, especially at lower context lengths. However, the two models performed identically at a context length of 2,000 tokens and produced mixed results at intermediate lengths. At the longest lengths tested (up to 31,500 tokens), GPT4o came out ahead, but only slightly.

CapeStart’s Internal Testing of Turbo vs. Omni

Overall, machine learning experts say Omni is the better choice for applications that require fast reasoning and natural language, such as chatbots, and CapeStart’s recent internal testing supports that conclusion.

Other results of CapeStart’s testing of Omni vs. Turbo:

Cohesiveness: Omni produces more cohesive, better-structured summaries than Turbo.

Summarization: Omni includes numerical values (such as percentages and p-values) in its summaries; Turbo does not. This is largely thanks to Omni’s ability to understand tabular data.

Categorization: Omni can categorize content based on complex custom requests consisting of multi-level subgroups, but it often does so incorrectly.
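
The summarization and categorization findings suggest two checks you might automate when using either model. The sketch below shows two hypothetical post-processing checks. These are not CapeStart’s actual test method. The first flags percentages and p-values that appear in a source document but are missing from a summary. The second validates a “Category > Subcategory” label against an allowed taxonomy. The regular expression, label format, and example taxonomy are all illustrative assumptions. The validator catches structurally invalid labels, but not labels that are valid yet semantically wrong.

```python
import re
from typing import Optional

# --- Summarization check (hypothetical heuristic) ---
# Matches simple percentages ("12.5%") and p-values ("p < 0.05", "p=.001").
STAT_PATTERN = re.compile(r"\d+(?:\.\d+)?%|\bp\s*[<>=]\s*\d*\.\d+", re.IGNORECASE)


def extract_stats(text: str) -> set[str]:
    """Return statistics found in text, lowercased with whitespace removed."""
    return {re.sub(r"\s+", "", m).lower() for m in STAT_PATTERN.findall(text)}


def missing_stats(source: str, summary: str) -> set[str]:
    """Statistics that appear in the source but were dropped from the summary."""
    return extract_stats(source) - extract_stats(summary)


# --- Categorization check (hypothetical two-level taxonomy) ---
TAXONOMY = {
    "Billing": {"Refund", "Invoice"},
    "Technical": {"Login", "Performance"},
}


def validate_label(label: str, taxonomy: dict[str, set[str]] = TAXONOMY) -> Optional[str]:
    """Check a 'Category > Subcategory' label; return an error message, or None if valid."""
    parts = [part.strip() for part in label.split(">")]
    if len(parts) != 2:
        return f"expected 'Category > Subcategory', got {label!r}"
    category, subcategory = parts
    if category not in taxonomy:
        return f"unknown category {category!r}"
    if subcategory not in taxonomy[category]:
        return f"{subcategory!r} is not a subcategory of {category!r}"
    return None


if __name__ == "__main__":
    source = "Response rate rose from 40% to 55% (p < 0.01)."
    print(missing_stats(source, "Response rate improved significantly."))
    print(validate_label("Technical > Refund"))
```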

Conclusion

Both Turbo and Omni are robust models, but Omni is the clear winner. On average, it offers faster speeds, higher rate limits, and lower operating costs. It also handles a much wider range of inputs and outputs, including audio and video, which Turbo cannot handle.

CapeStart’s own testing indicated that Omni outperforms Turbo in cohesiveness, summarization, and categorization, although Omni still has a long way to go before it becomes proficient at categorization.

To dive deeper into the power of large language models (LLMs) and what they can do for your business, contact the AI and machine learning experts at CapeStart. Schedule a one-on-one discovery call, and we’ll show you how you can start scaling your innovation and productivity while lowering costs with AI right now.
